Visual Impairment: A New Take on Navigation

Author: Gerd Grüner

What if you simply closed your eyes, blindly opened Google Maps on your smartphone, and tried to navigate from A to B? The attempt would almost certainly fail. For blind and visually impaired people, however, such limitations are part of everyday life. Can digital technologies really support users with disabilities? Can AI replace seeing-eye dogs?

Digital technologies can make everyday life easier, more comfortable, and safer for blind and visually impaired people, and the demand is there. The Paris-based European Blind Union estimates that there are more than six million blind and 24 million visually impaired people across geographical Europe. According to the German Federal Statistical Office, there were about 453,000 visually impaired people in Germany on December 31, 2019. Under the definition used by the German Association of the Blind and Visually Impaired, this means that the people affected have at most 30 percent vision in their better eye or a correspondingly restricted field of vision. In addition, there are around 51,000 severely visually impaired people (at most five percent vision) and 77,000 blind people (at most two percent vision).

Digital Technologies Introduce New Areas of Activity for People with Disabilities

The range of aids extends from color recognizers with voice output, through talking scales, talking clocks, and electronic fill level indicators for containers, to so-called Braille displays, which connect to a computer and reproduce the on-screen text line by line in Braille. The OrCam MyEye, for example, is a mini-camera that attaches to the arm of a pair of glasses. It recognizes text on displays, on signs, or in books and reads it aloud through an integrated speaker. The device is also designed to recognize banknotes and read barcodes on packaging. Digital technologies can thus open up new areas of activity for people with disabilities. But can they also replace seeing-eye dogs or white canes? Are there navigation systems that can reliably and safely guide people from their home to the train station, or to the restroom in a museum?

Indeed, navigation for people with visual impairments is a field in which scientists and engineers have long been pulling out all the stops. It’s no coincidence that they keep drawing parallels with digital navigation in the automotive industry. The same basic questions arise in both fields: Where am I now? Where am I going? What’s the best route to get there? What obstacles and restrictions should I expect along the way? However, people with visual impairments need more information than sighted people to move safely through traffic. Google Maps, for instance, could not guide a blind person safely from A to B through a city, because it provides no information about ground surfaces, changes in level on sidewalks and squares, obstacles, or tactile ground indicators. The ideal solution would be navigation systems tailored to the user’s specific degree of visual impairment. So far, however, the market for such systems has been very limited. For the time being, blind and severely visually impaired people therefore still depend on seeing-eye dogs or white canes to detect obstacles on the ground.

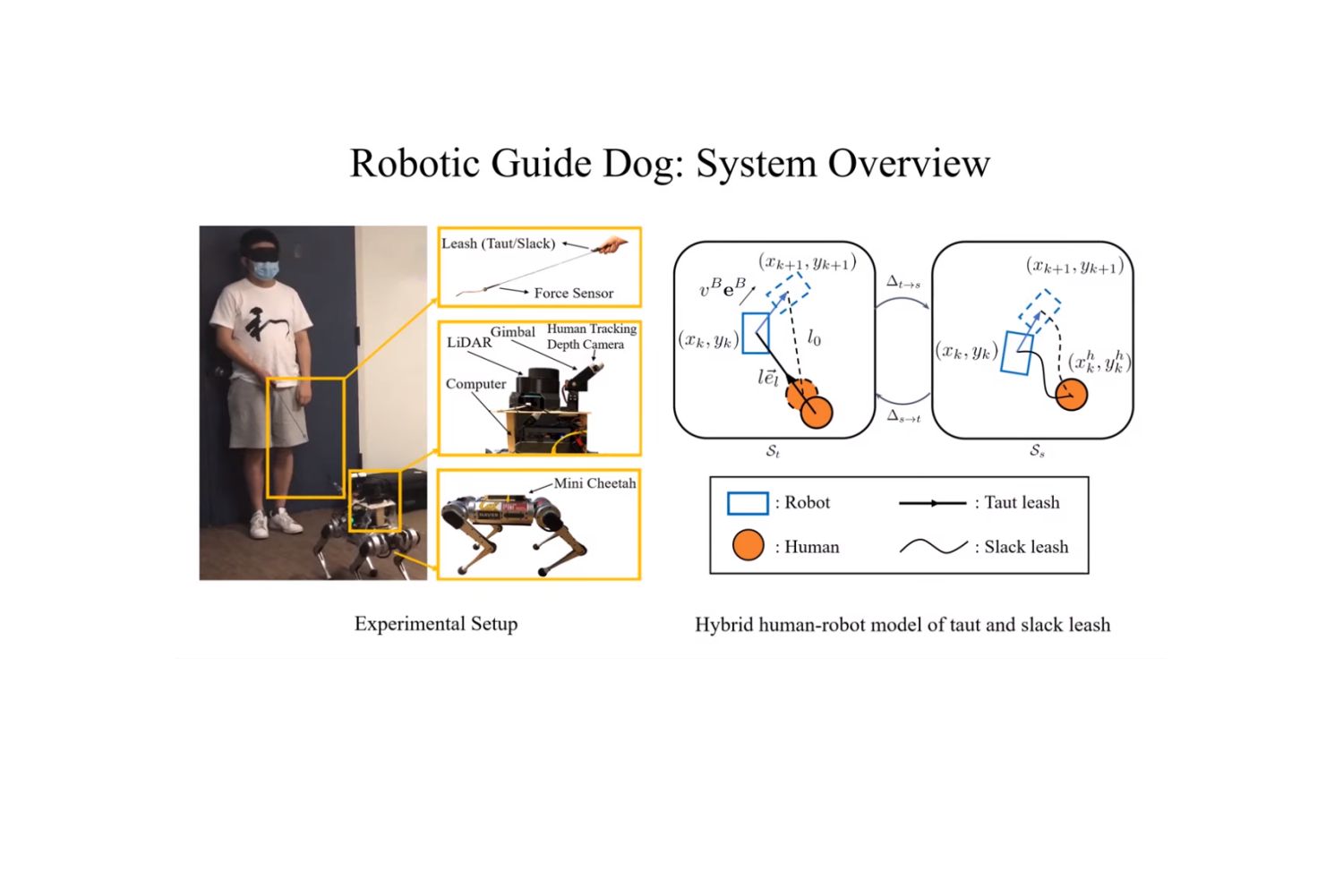

Robots and AI Drones Compete with Seeing-Eye Dogs

Resourceful scientists, however, have long since turned their attention to these standard aids. Canes with ultrasonic sensors built into the handle can detect obstacles at head or chest height, such as low-hanging branches or open truck loading ramps, and warn the user with a vibration signal. The long cane becomes a full-blown high-tech tool once a camera, a GPS antenna, and laser technology come into play. Researchers at Stanford University in California have developed a prototype that signals obstacles and ways around them through acoustic signals, vibration, or a physical guiding force. At the University of California, Berkeley, scientists are experimenting with a four-legged robot equipped with artificial intelligence and lidar sensors. It is said to guide visually impaired people more effectively than a flesh-and-blood dog. Thanks to self-learning algorithms, the robot dog is expected to adapt better to its user day by day and to become a near-perfect guide over time. A flying version of the seeing-eye dog was recently field-tested by scientists from China together with the Karlsruhe Institute of Technology. The user holds the AI drone on a leash while it scans the surroundings with its camera. The system recognizes walkable paths, obstacles, and traffic lights. It guides the user along the planned route and gives spoken instructions via a headset.
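The obstacle warning in ultrasonic canes rests on a simple time-of-flight calculation. The handle emits a pulse, measures how long the echo takes to return, and converts that time into a distance. The Python sketch below illustrates only this principle. The speed of sound assumes dry air at about 20 °C. The warning distance and the three vibration levels are hypothetical values and do not come from any particular product.

```python
# Hypothetical sketch of an ultrasonic cane's obstacle warning.
# The thresholds and vibration levels are illustrative assumptions.

SPEED_OF_SOUND_M_PER_S = 343.0  # dry air at about 20 °C


def echo_to_distance_m(round_trip_s: float) -> float:
    """The pulse travels to the obstacle and back, so halve the path."""
    return SPEED_OF_SOUND_M_PER_S * round_trip_s / 2.0


def vibration_level(distance_m: float, warn_at_m: float = 2.0) -> int:
    """Return 0 (off) to 3 (strong): the closer the obstacle, the stronger."""
    if distance_m >= warn_at_m:
        return 0
    if distance_m >= warn_at_m * 2 / 3:
        return 1
    if distance_m >= warn_at_m / 3:
        return 2
    return 3


distance = echo_to_distance_m(0.01)  # echo returns after 10 ms
print(distance, vibration_level(distance))
```

In this sketch, an echo that returns after 10 milliseconds corresponds to about 1.7 meters, which maps to the weakest vibration level.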

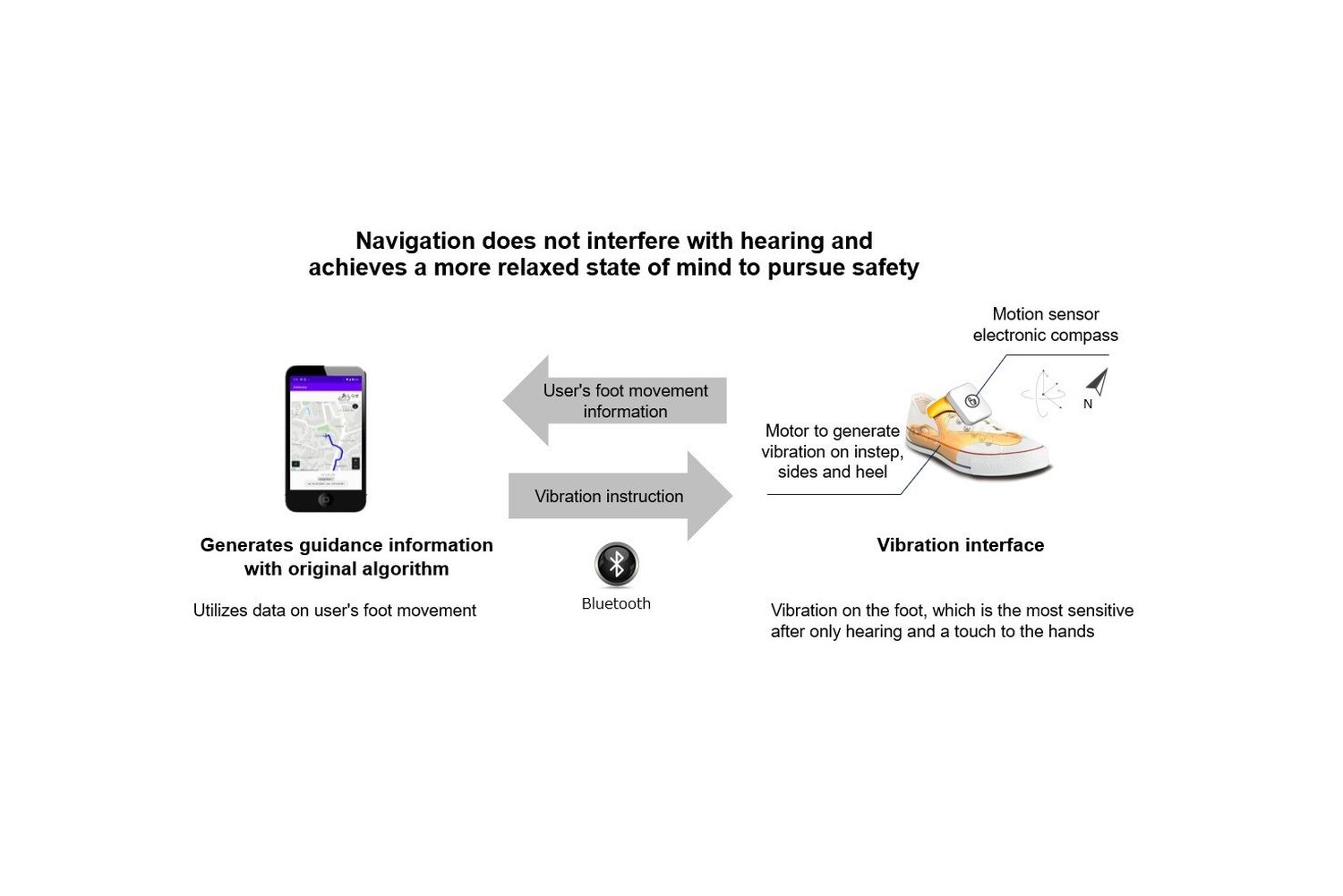

Wearables – Navigation in Footwear

The Japanese start-up Ashirase is developing a navigation system built into shoes to help people with visual impairments get around on foot. It consists of a smartphone app and a three-dimensional vibration device with a motion sensor placed inside the shoe. The system follows the route set in the app and gives navigation instructions through vibrations. If the user needs to go straight ahead, the device vibrates at the front of the foot. If the user needs to turn left or right, it vibrates on the corresponding side. Tec-Innovation, a company based in Lower Austria, is working with the Institute for Machine Vision and Display at Graz University of Technology on artificial intelligence in footwear. Their current prototype is a shoe with ultrasonic sensors in the toe and an integrated camera. The system uses machine-learning algorithms trained to recognize an obstacle-free, and thus hazard-free, walkable area in camera images taken from the foot’s perspective.
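The shoe’s direction logic can be sketched as a comparison between the user’s current heading and the bearing to the next waypoint on the route. In this hypothetical Python sketch, the heading is assumed to come from the phone’s compass and the bearing from the app’s route. The 20-degree tolerance for "straight ahead" is an illustrative choice, not Ashirase’s actual parameter.

```python
# Hypothetical sketch: choose which vibration zone in the shoe to trigger.
# Angles are compass degrees (0 = north, increasing clockwise).


def turn_angle_deg(heading_deg: float, target_bearing_deg: float) -> float:
    """Signed angle from current heading to target bearing, in (-180, 180].

    Positive means turn clockwise (right), negative means turn left.
    """
    diff = (target_bearing_deg - heading_deg) % 360.0
    if diff > 180.0:
        diff -= 360.0
    return diff


def vibration_zone(heading_deg: float, target_bearing_deg: float,
                   straight_tolerance_deg: float = 20.0) -> str:
    """Map the turn angle to 'front', 'left', or 'right'."""
    angle = turn_angle_deg(heading_deg, target_bearing_deg)
    if abs(angle) <= straight_tolerance_deg:
        return "front"
    return "right" if angle > 0 else "left"


print(vibration_zone(350.0, 10.0))  # waypoint slightly right of north
print(vibration_zone(0.0, 90.0))    # waypoint due east
print(vibration_zone(90.0, 0.0))    # facing east, waypoint due north
```

The difference is wrapped into the range from -180 to 180 degrees. As a result, a user facing 350 degrees with a waypoint at 10 degrees gets a "front" signal rather than an instruction to turn almost all the way around.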

Routago – AI for Visually Impaired Pedestrians

Together with its research partner, the Karlsruhe Institute of Technology (KIT), the start-up Routago has developed an AI-based technology platform. It provides blind and visually impaired people with tools and aids for getting around in traffic. The navigation takes sides of the street, sidewalks, parks, and pedestrian zones into account. It guides users to safe crossing points such as traffic lights, crosswalks, pedestrian bridges, and underpasses. The system combines navigation, object recognition, environmental information, and individual route management. To do so, it enriches existing geodata with algorithmically derived information specifically for pedestrians. Users hear the directions via voice output.
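Routing that prefers safe crossings can be illustrated with a standard shortest-path search in which each segment’s length is multiplied by a safety penalty. The following Python sketch uses Dijkstra’s algorithm. The edge types, penalty factors, and example street graph are all hypothetical and do not reflect how Routago actually weights its data.

```python
import heapq

# Hypothetical safety penalties: an unmarked crossing "costs" ten times its length.
PENALTY = {
    "sidewalk": 1.0,
    "traffic_light": 1.0,
    "crosswalk": 1.2,
    "underpass": 1.5,
    "unmarked_crossing": 10.0,
}


def safest_route(graph, start, goal):
    """Dijkstra over edges weighted by length x safety penalty.

    graph: {node: [(neighbor, length_m, edge_kind), ...]}
    Returns the node list from start to goal, or None if unreachable.
    """
    best = {start: 0.0}
    previous = {}
    queue = [(0.0, start)]
    while queue:
        cost, node = heapq.heappop(queue)
        if node == goal:
            break
        if cost > best.get(node, float("inf")):
            continue  # stale queue entry
        for neighbor, length_m, kind in graph.get(node, []):
            new_cost = cost + length_m * PENALTY[kind]
            if new_cost < best.get(neighbor, float("inf")):
                best[neighbor] = new_cost
                previous[neighbor] = node
                heapq.heappush(queue, (new_cost, neighbor))
    if goal not in best:
        return None
    path = [goal]
    while path[-1] != start:
        path.append(previous[path[-1]])
    return path[::-1]


# Hypothetical example: a short unmarked crossing versus a detour via a traffic light.
example_graph = {
    "home": [("corner", 50, "sidewalk")],
    "corner": [("station", 15, "unmarked_crossing"), ("signal", 60, "sidewalk")],
    "signal": [("far_side", 15, "traffic_light")],
    "far_side": [("station", 40, "sidewalk")],
}
print(safest_route(example_graph, "home", "station"))
```

The direct route over the unmarked crossing is shorter (65 meters), but its penalized cost is 200. The search therefore picks the 165-meter detour via the traffic light.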

NaviLens – A Digital Map for the Blind

Based in Murcia, Spain, NaviLens has developed a smartphone-based solution that makes bus travel, sightseeing, and shopping possible for people with visual impairments. At the heart of the application are special QR codes measuring around 20 square centimeters. A smartphone camera can recognize these codes from up to twelve meters away and from different angles. The app recognizes the codes without needing to focus. It retrieves the linked information almost instantly and reads it out to users as spoken instructions. The technology is currently used in the public transit systems of Barcelona, Madrid, and Murcia. New York and Los Angeles are currently testing the system in a subway station and a train station.

Indoor Navigation – everGuide from the Fraunhofer Institute FOKUS

The indoor navigation app everGuide from the Fraunhofer Institute FOKUS in Berlin is designed to help users find their way safely around large, complex buildings. To keep the app’s building data up to date, the Fraunhofer scientists send out a robot with cameras and laser scanners that creates an exact digital map. The smartphone plays a key role in navigation. Its acceleration and angular rate sensors, for example, help determine the user’s location by recording speeding up, slowing down, and changes in the device’s orientation. Signs installed in the building provide further input. They carry special QR codes that the smartphone camera recognizes automatically and that also serve to determine position. The navigation software on the smartphone merges these data sources and adjusts the guidance accordingly. Field tests of the app are currently under way in the House of Health and Family in Berlin, in the city hall of Solingen in North Rhine-Westphalia, and in the Foreigners’ Registration Office in Cologne.
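The combination of motion sensors and position markers can be illustrated with pedestrian dead reckoning. Each detected step moves the estimated position forward along the current heading, and the gyroscope updates that heading. A recognized sign at a known location corrects the drift that accumulates between markers. This Python sketch is a simplified, hypothetical model. The step length, the step-detection threshold, and the blending weight are assumptions, and the source does not describe everGuide’s actual fusion algorithm.

```python
import math
from dataclasses import dataclass


@dataclass
class Position:
    x_m: float
    y_m: float


class DeadReckoningTracker:
    """Hypothetical step-and-heading tracker with marker-based correction.

    Heading is measured clockwise from the +y axis of the floor plan, so a
    positive angular rate means the user is turning right (an assumption).
    """

    def __init__(self, start, step_length_m=0.7, step_threshold_m_s2=11.0):
        self.position = start
        self.heading_rad = 0.0
        self.step_length_m = step_length_m
        self.step_threshold_m_s2 = step_threshold_m_s2
        self._above_threshold = False

    def on_angular_rate(self, rate_rad_s, dt_s):
        # Integrate the angular rate to follow changes in walking direction.
        self.heading_rad += rate_rad_s * dt_s

    def on_acceleration(self, magnitude_m_s2):
        # Count a step each time the acceleration magnitude rises above the
        # threshold (a crude peak detector; gravity alone is about 9.81 m/s^2).
        above = magnitude_m_s2 > self.step_threshold_m_s2
        if above and not self._above_threshold:
            self._advance_one_step()
        self._above_threshold = above

    def _advance_one_step(self):
        self.position = Position(
            self.position.x_m + self.step_length_m * math.sin(self.heading_rad),
            self.position.y_m + self.step_length_m * math.cos(self.heading_rad),
        )

    def on_marker(self, marker_position, weight=1.0):
        # A recognized sign has a surveyed position; blend the estimate toward
        # it (weight 1.0 snaps to the marker, smaller values trust it less).
        self.position = Position(
            (1 - weight) * self.position.x_m + weight * marker_position.x_m,
            (1 - weight) * self.position.y_m + weight * marker_position.y_m,
        )


tracker = DeadReckoningTracker(Position(0.0, 0.0))
for sample in [9.8, 12.0, 9.8, 12.5, 9.7]:  # two acceleration peaks = two steps
    tracker.on_acceleration(sample)
tracker.on_angular_rate(math.pi / 2, 1.0)     # turn 90 degrees to the right
tracker.on_acceleration(12.0)                 # one more step, now along +x
print(tracker.position)
tracker.on_marker(Position(0.5, 1.5))         # sign with a known position
print(tracker.position)
```

Snapping fully to the marker (weight 1.0) is the simplest correction. A real system would more likely weigh each source by its expected error, for example with a Kalman filter.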

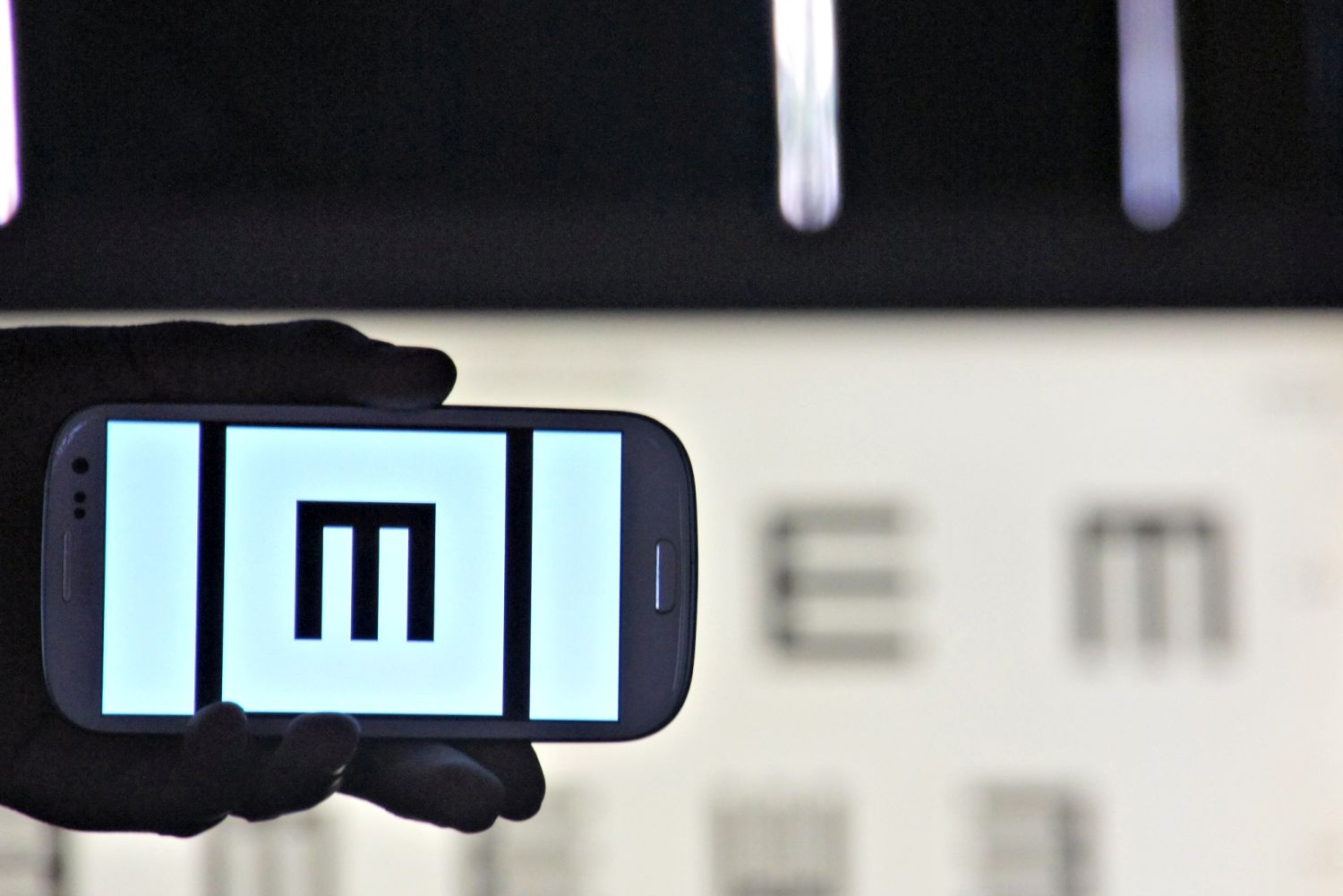

Peek Vision – Measuring Visual Acuity with Smartphones

Five years ago, the British company Peek Vision launched Peek Acuity, an app that is available in more than 190 countries as a certified medical device for checking vision. With the Peek Acuity test, trained laypeople can measure patients’ visual acuity using nothing more than a smartphone and forward the results to doctors for remote diagnosis. The subject is shown the letter E in various orientations and sizes on the smartphone display, just like on a classic eye chart at the ophthalmologist’s office. The test person sits on a chair three meters from the screen, covers one eye, and points in the direction in which the open side of the E faces. Once the test is complete, the app displays the calculated visual acuity value.
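Behind such a test lies straightforward geometry. By convention, the whole letter E subtends 5 minutes of arc at a visual acuity of logMAR 0 (decimal acuity 1.0), and each step of 0.1 logMAR enlarges it by a factor of 10^0.1. The Python sketch below computes how tall the E must be at the three-meter viewing distance and how many pixels that corresponds to on a display. This is the generic optotype calculation, not Peek’s published algorithm. The 400 ppi screen density is a hypothetical example.

```python
import math

ARCMIN_RAD = math.radians(1 / 60)  # one minute of arc in radians


def optotype_height_mm(logmar, distance_m=3.0):
    """Height of a letter E whose full height subtends 5 arcmin x 10**logMAR."""
    angle_rad = 5 * ARCMIN_RAD * 10 ** logmar
    return 2 * distance_m * 1000 * math.tan(angle_rad / 2)


def height_in_pixels(height_mm, pixels_per_inch):
    """Convert a physical height to display pixels (25.4 mm per inch)."""
    return height_mm / 25.4 * pixels_per_inch


def decimal_acuity(logmar):
    """Decimal acuity (1.0 = normal vision) from logMAR."""
    return 10 ** (-logmar)


def logmar_from_decimal(decimal):
    """logMAR from decimal acuity, e.g. 0.3 ("30 percent vision")."""
    return -math.log10(decimal)


for logmar in (0.0, 0.5, 1.0):
    mm = optotype_height_mm(logmar)
    px = height_in_pixels(mm, pixels_per_inch=400)  # hypothetical screen density
    print(f"logMAR {logmar:.1f}: {mm:.1f} mm, about {px:.0f} px at 400 ppi")
```

At logMAR 0 the E is only about 4.4 millimeters tall at three meters, which is why the app must know the screen’s pixel density precisely. The `logmar_from_decimal` helper also shows that the 30 percent threshold mentioned at the start corresponds to roughly logMAR 0.52.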